TL;DR

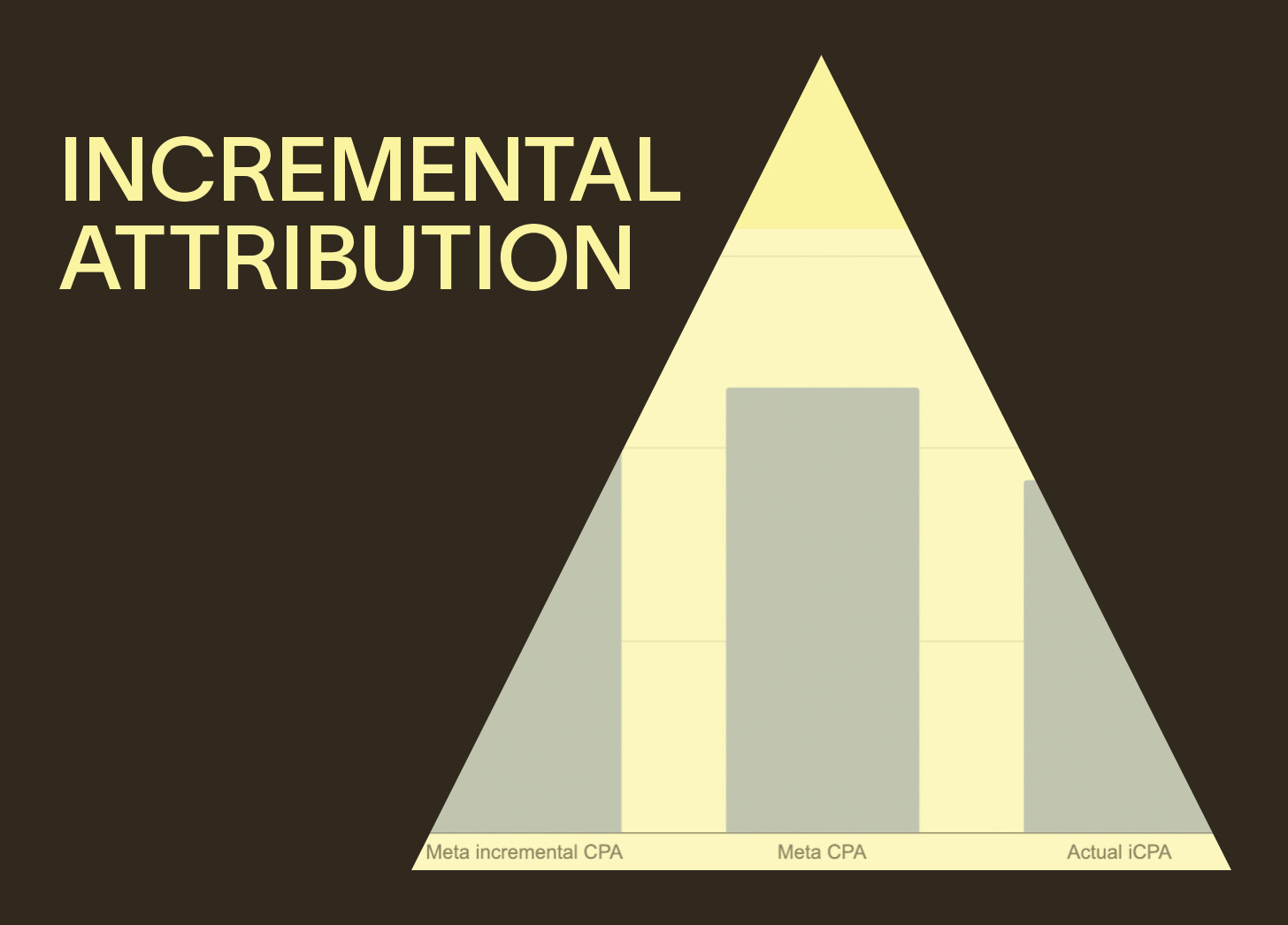

Alongside its click- and view-based windows, Meta has introduced “incremental attribution,” an attempt to help prove which ads are actually driving performance. Based on our own incrementality data, we’re not yet sold.

Incremental Attribution got everyone excited when it launched a month ago, but now, a handful of experiments in, it’s still leaving som…