Perfectionism is killing your startup

Test, don't debate

Today, I want to talk about the cost of time and why testing is much better value for money than you think.

First, let me recount a conversation about an ad that took over 3 weeks of editing to go live.

“This ad isn’t going to work,” the founder said.

“Well, we think there’s good reason why it could, but let’s test it,” I replied.

“So you’re not even sure?”

“No – no-one can be sure, but we believe it could.”

“It’s not right how it is, it needs more work.”

There are versions of this conversation everywhere. In advertising and creative, there are long conversations about whether an ad is right or not. But it’s not just advertising and creative: versions of this conversation take place in every part of a business.

There can be debates about which country to launch in, what product line to develop, which feature to introduce next, what software to bring into customer support, and the list goes on.

Everyone in these environments has roughly the same motivation: they want their thing to be a success. But these debates come down to different opinions on how to get there.

Most importantly, they separate those who see business growth probabilistically from those who see it deterministically.

Probabilistic vs deterministic ways of thinking

Deterministic thinkers believe that actions cause direct outcomes. They love historic data and believe there are ways to make sense of the future.

Probabilistic thinkers understand there is a huge amount of uncertainty and recognise that any action leads to a range of possible outcomes.

The deterministic thinker in these debates is often the one who advocates strongly for the “right” way to do something. At some point they saw an action lead to an outcome, and that experience shaped their world view: “Apple has exceptional design, that’s why it works – we must have exceptional design too.”

Startup growth is probabilistic

By the nature of building a startup, you are building something new without historic data. You might be creating a category. Or you might be radically improving one.

Your growth rate should be in the triple-digit percentages every year for the first five years. There is no roadmap. There is no playbook. You have to learn everything for yourself. And the world around you is constantly changing.

Building and growing a startup is highly unpredictable.

Ad winners in particular are highly unpredictable

Lots of people will debate me on this, but here are the things I personally know to be true:

We currently launch about 80-100 ad concepts every week

We deem the majority of ad launches to be fails

No-one I have ever met can reliably predict what ad will be a winner

Despite this, compared with data from other agencies and startups, we have a reasonably high “win” rate

Within individual clients’ accounts, success rates improve over time as we learn, so you can improve your success rate based on past data

Despite that, there is still a huge amount of randomness

Outsized winners, which are the biggest growth levers, are usually the least predictable part of an account

All of the above is why our creative strategy is first and foremost an experimentation strategy. The most important thing is hypothesis → test → learn, and we should aim to do that as fast as possible, document clearly, and refactor learnings as soon as we can.

We do all of this to increase the probability of finding a successful ad. But you can’t ever guarantee success. All we can do is attempt to increase the probability of success.

The decision then comes down to this: at what point is it worth paying the cost of derisking rather than the cost of testing?

The cost of deterministic thinking

I was fortunate that my first ever job was in an agency. In that job I started to see hours as billable units of time. I was a content marketer for that agency and, while I wasn’t client-facing, I became acutely aware of the cost of time.

Every minute spent doing something costs money. That time can never be repurchased. There’s no refund on it. You can only spend it that way once.

And it’s not just about the direct cost to the business. Every person in the business is expected to generate a return for that business.

While it’s very easy to consider the cost of cash leaving your bank account to go to Meta, I find that, no matter how smart the thinker, people rarely think about the cost of, and potential return on, an hour of time spent.

Customer development is time well spent

As an agency, we experiment all the time. Most are micro-experiments, e.g. “will this UGC style work for this client this week?”

But we also run macro experiments, like “what approach to creative strategy should we take?” This becomes something we might broadly A/B test across our client roster, running one creative strategy method with half our clients and another with the other half.

These tests are much murkier and aren’t pure experiments, but they constantly refine our process and thinking.

One agency-wide known is that the Jobs to be Done framework is highly valuable in creating good ads. We’ve landed on that from trying different frameworks (from personas to minimum viable strategy), and we view time spent learning about customers as valuable time.

Specifically: talking to customers, understanding their problems, and learning how they dealt with those problems before they became customers of the brand in question. For us, this is valuable time for everyone involved, whether the brand runs the interviews and feeds back to us, or we run the interviews ourselves, as we do with our biggest clients.

What’s the value of a member of the team?

These are five of the top 15 fastest-growing businesses in the UK, according to the Sunday Times:

Rheal did £19.8m of revenue with ~ 40 employees = £495k per employee

Trip did £20m of revenue with ~ 176 employees = £113k per employee

Ancient + Brave did £10.2m revenue with ~67 employees = £152k per employee

Purdy and Figg did £18.3m revenue with ~69 employees = £265k per employee

Simmer did £7.4m revenue with 57 employees = £129k per employee

If we assume an average salary of £45k, that’s a revenue return of roughly 2.5-11x each employee’s cost, when averaged out.
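To make the per-employee arithmetic easy to rerun for other companies, here’s a small Python sketch. The revenue and headcount figures are the ones quoted above, the £45k average salary is the same assumption used in the text, and the dictionary and function names are purely illustrative.

```python
# Revenue (GBP) and approximate headcount, as quoted above.
COMPANIES = {
    "Rheal": (19_800_000, 40),
    "Trip": (20_000_000, 176),
    "Ancient + Brave": (10_200_000, 67),
    "Purdy and Figg": (18_300_000, 69),
    "Simmer": (7_400_000, 57),
}

AVERAGE_SALARY = 45_000  # assumed average salary, as in the text


def revenue_per_employee(revenue: float, headcount: int) -> float:
    """Annual revenue divided by headcount."""
    return revenue / headcount


# Revenue per employee expressed as a multiple of the assumed salary.
multiples = {}
for name, (revenue, headcount) in COMPANIES.items():
    per_head = revenue_per_employee(revenue, headcount)
    multiples[name] = per_head / AVERAGE_SALARY
    print(f"{name}: £{per_head:,.0f} per employee, {multiples[name]:.1f}x salary")
```

Trip sits at the bottom of the range (about 2.5x) and Rheal at the top (11x), which is where the 2.5-11x range comes from.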

The costs of debating and testing

There are a handful of things to consider here: the costs and the potential outcomes.

Let’s run some assumptions.

At Ballpoint, we see ads in three categories: losers, maintainers, and winners.

Losers are ads where the CPA is unsustainably higher than the profitability target

Maintainers are where the CPA is in line with the profitability target for the current level of scale

Rocketship winners are where there is a strong improvement in CPA and therefore potential to scale.

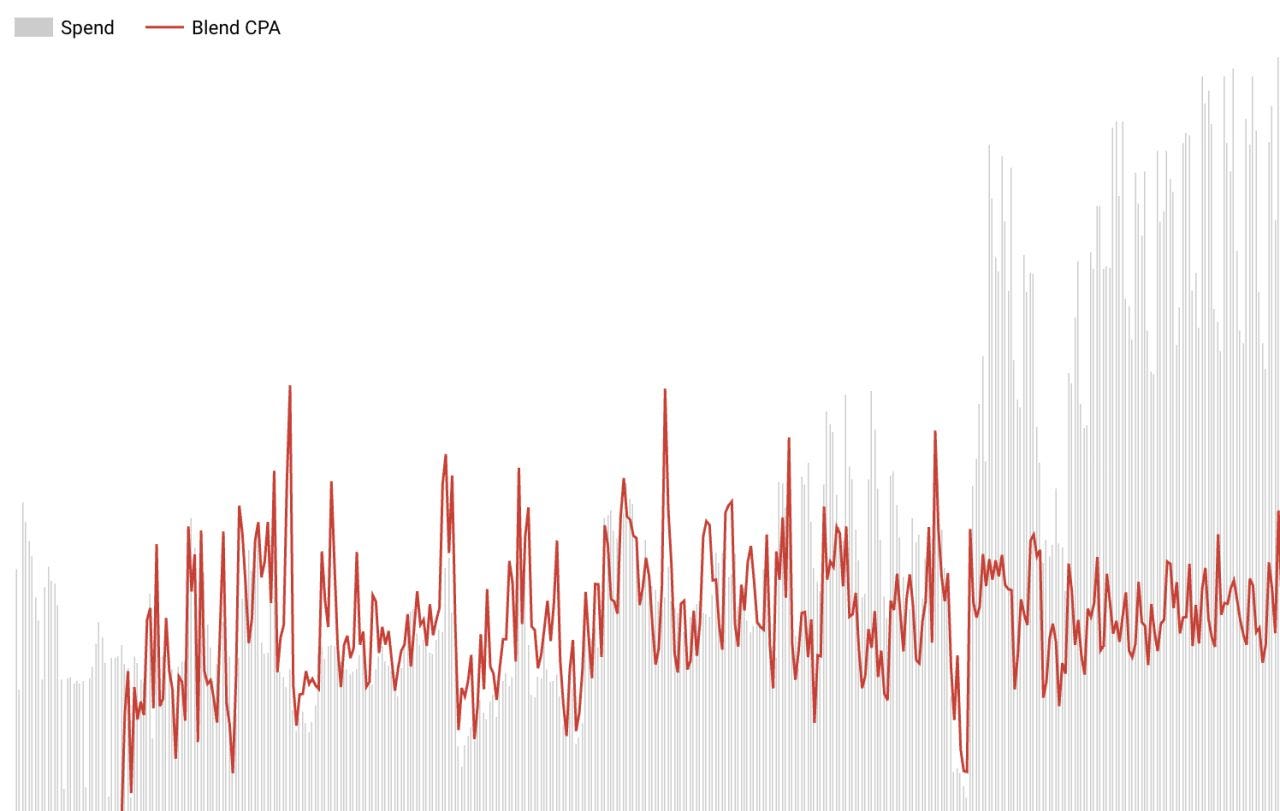

What does this look like charted?

In this example, you can see the huge impact that rocketship winners can have. This is real client data with three distinct phases of work:

Spend and CPA are correlated, i.e. we’re unable to scale while we’re learning

We start to find some light winners that allow us to have bigger spend without a big shift in CPA (maintainers)

We then land on rocketship winners, which allow us to keep CPA as is but more than triple our daily spend within a very short timeframe.

Now, with this in mind, let’s have a think about what this looks like month-to-month in practice.

For example, let’s assume we have an account spending £50k per month, with a £10k per month test budget.

We run 16 creative concept tests per month, or £625 of media spend per concept tested.

Our gross profit margin on ad spend is 60% (the 2.5:1 LTV:CAC ratio).

Let’s assume we’ve got 42 ads currently live.

Our outcomes are:

Loser –

55% of the time

Profit = £625 x 60% = £375

Maintainer -

43% of the time

Joins the winning ad pool and, for simplicity, gets 1/42nd of the £40k hero spend = £952/month.

Profit in month one = £952 x 60% = £571

Assume a lifespan of 2 months

Total profit = 2x £571 = £1,142 plus the initial test profit of £375

Total profit = £1,517

Rocketship winners

2% of the time

Allows us to increase monthly spend to £75k, or £60k of hero spend, as we also increase the test budget to keep it at 20% of spend

This now has £1,429 / month of spend per ad (£60k/42 – again simplistic)

Lifespan of rocketships is longer, assume 4 months

Ongoing profit = 4x (£1,429 x 60%) = 4x £857 = £3,428, plus the initial test profit of £375

Total profit = £3,803

But it also increases the budget of the entire system: the extra £20k/month of hero spend (£60k vs £40k) at a 60% margin adds £12k/month of profit permanently.

That means over 12 months there’s a total of £144k additional profit in our system.

Total winner value is now £3,803 for the individual ad + £144k for the whole system =

£147,803 profit
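The outcome arithmetic above can be reproduced in a few lines of Python. This is a sketch of the same simplified model: every number comes from the example (60% margin, £50k spend, £10k test budget, 16 concepts, 42 live ads, 2- and 4-month lifespans), monthly profit is rounded to whole pounds as in the text, and the variable names are our own.

```python
MARGIN = 0.60             # gross profit per £1 of ad spend (from the 2.5:1 LTV:CAC assumption)
MONTHLY_SPEND = 50_000
TEST_BUDGET = 10_000
CONCEPTS_PER_MONTH = 16
LIVE_ADS = 42

test_spend = TEST_BUDGET / CONCEPTS_PER_MONTH   # £625 per concept
hero_spend = MONTHLY_SPEND - TEST_BUDGET        # £40k shared by the live ads
test_profit = test_spend * MARGIN               # £375, earned by every test

# Loser: only the test itself.
loser_profit = test_profit

# Maintainer: 1/42nd of hero spend for a 2-month lifespan, plus the test.
maintainer_monthly = round(hero_spend / LIVE_ADS * MARGIN)    # £571
maintainer_profit = 2 * maintainer_monthly + test_profit      # £1,517

# Rocketship: spend rises to £75k with the test budget kept at 20%.
new_spend = 75_000
new_hero_spend = new_spend - 0.20 * new_spend                 # £60k
rocket_monthly = round(new_hero_spend / LIVE_ADS * MARGIN)    # £857
rocket_ad_profit = 4 * rocket_monthly + test_profit           # £3,803 over a 4-month lifespan
system_uplift = (new_hero_spend - hero_spend) * MARGIN        # £12k/month across the account
rocketship_profit = rocket_ad_profit + 12 * system_uplift     # £147,803 over 12 months

for label, value in [("Loser", loser_profit),
                     ("Maintainer", maintainer_profit),
                     ("Rocketship", rocketship_profit)]:
    print(f"{label}: £{value:,.0f}")
```

Keeping the inputs as named constants makes it easy to see how sensitive the rocketship value is to the 12-month horizon, which accounts for £144k of the £147,803.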

What’s the Expected Value of this ad then?

Based purely on the media spend of £625 per ad, the EV of an ad test is £3,814 (55% x £375 + 43% x £1,517 + 2% x £147,803).

For those who are unfamiliar, Expected Value is a term borrowed from probability theory: the average result you’d expect if you repeated a decision or bet many times, like the long-term payoff of a choice. It’s widely used in poker, where it helps players weigh the possible outcomes of a decision.

It’s something we use increasingly at Ballpoint to help make decisions where it might otherwise look quite tricky.
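As a sketch, here’s the EV calculation in Python using the three outcomes from the worked example: each outcome’s probability multiplied by its profit, then summed. The probabilities and profits are the example’s assumptions, not general benchmarks.

```python
# (probability, total profit in GBP) for each outcome of a single ad test.
OUTCOMES = {
    "loser": (0.55, 375),
    "maintainer": (0.43, 1_517),
    "rocketship": (0.02, 147_803),
}


def expected_value(outcomes: dict[str, tuple[float, float]]) -> float:
    """Probability-weighted average payoff across all outcomes."""
    total_probability = sum(p for p, _ in outcomes.values())
    if abs(total_probability - 1) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * payoff for p, payoff in outcomes.values())


ev = expected_value(OUTCOMES)
print(f"EV per ad test: £{ev:,.2f}")  # £3,814.62
```

The rocketship term alone contributes about £2,956 of that total, which is why a 2% outcome dominates the EV.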

The cost of debate

Let’s assume some costs here:

Startup founder:

1 hour of time editing and giving feedback

£100k salary

Hourly rate of £77

2.5x expected return on time

£192 of cost

Startup Head of Growth

1 hour of time

£90k salary

£70 hourly rate

2.5x expected return on time

£175 of cost

Marketing manager

1 hour of time

£50k salary

£38 hourly rate

2.5x expected return on time

£95 of cost

Those three people spending one hour each on editing and feedback creates £462 of extra cost.
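The hourly rates above aren’t derived in the text. As a sketch, if we assume roughly 1,300 productive hours a year (our assumption, chosen because it approximately reproduces the £77 founder rate) and apply the 2.5x expected return on time to everyone, the cost of a one-hour review works out like this:

```python
HOURS_PER_YEAR = 1_300   # assumption: productive hours per year, not stated in the text
RETURN_ON_TIME = 2.5     # each hour is expected to return 2.5x its cost

SALARIES = {
    "Founder": 100_000,
    "Head of Growth": 90_000,
    "Marketing manager": 50_000,
}


def cost_of_time(salary: float, hours: float) -> float:
    """Opportunity cost of time: hourly rate x expected return x hours spent."""
    hourly_rate = salary / HOURS_PER_YEAR
    return hourly_rate * RETURN_ON_TIME * hours


costs = {role: cost_of_time(salary, hours=1) for role, salary in SALARIES.items()}
for role, cost in costs.items():
    print(f"{role}: £{cost:,.0f}")

total_cost = sum(costs.values())
print(f"Total for a one-hour review: £{total_cost:,.0f}")  # £462
```

The individual figures land within a couple of pounds of the list above, and the total comes out at the same £462. Changing `hours` gives the cost of longer sign-off loops.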

Assume 3 scenarios here:

Scenario A is a quick sign-off (maybe just 20 minutes of sign-off from all parties)

Scenario B is a long sign-off = £462 of cost

Scenario C is a very drawn-out sign-off = £1.3k of cost

Our EV is £3.8k

Each scenario now nets:

A = 4.73x return

B = 3.08x return

C = 1.3x return

Say the cost of creating and running all your ads for the month is £6k.

That now adjusts the returns to:

A = 2.86x return

B = 2.04x return

C = 0.93x return

Now, the high level of debate means the whole system is losing money: even if you achieve the same success rate, you’re going to lose money.
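The calculation behind these multiples isn’t spelled out. One formula that reproduces all six figures, which we’re assuming here, is return = (EV - debate cost - production cost) / (media spend + debate cost + production cost), with the £6k monthly creative cost spread across the 16 concepts (£375 each) and Scenario A’s 20-minute sign-off taken as roughly £150, about a third of the one-hour £462. Treat it as a reconstruction under those assumptions.

```python
EV = 3_814                 # expected profit per ad test (from above)
MEDIA_SPEND = 625          # test media spend per concept
PRODUCTION = 6_000 / 16    # assumption: £6k monthly creative cost over 16 concepts = £375

# Debate (sign-off) cost per ad in each scenario; A is an approximation.
DEBATE_COSTS = {"A": 150, "B": 462, "C": 1_300}


def net_return(debate_cost: float, production_cost: float = 0.0) -> float:
    """EV net of overheads, divided by everything spent on the ad."""
    overhead = debate_cost + production_cost
    return (EV - overhead) / (MEDIA_SPEND + overhead)


results = {
    name: (net_return(cost), net_return(cost, PRODUCTION))
    for name, cost in DEBATE_COSTS.items()
}
for name, (before, after) in results.items():
    print(f"Scenario {name}: {before:.2f}x before production, {after:.2f}x after")
```

Scenario C prints 1.31x before production, shown rounded as 1.3x in the text; the other five figures match to two decimal places.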

How to increase our chance of success

But I hear you cry: “If we debate this for longer and spend more time on it, we’ll increase the chance of it winning.” This is true: if we doubled the rate of winners, the EV would go positive.

But this is where the argument falls down.

Rocketships are almost impossible to predict

This is where we have to come back to my list of knowns from above.

The experimentation process is very good at improving the ratio between fails and maintainers. I.e. it is good at refining our ‘what’s working’ ads that make up large volumes of day to day performance.

The gains made to the probability of finding a rocketship winner, however, are much lower and harder to quantify. When we’re talking about 1 concept in 100 or 1 concept in 50 being a step-changer, the sample sizes aren’t large enough to reveal whether we’re genuinely improving that rate or whether it’s just down to chance (i.e. the actual rocketship probability sits somewhere in a 1-5% range).
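To see why small samples can’t pin down a 1-in-50 event, here’s a hypothetical sketch: suppose an account has run 200 concept tests (roughly a year at 4 per week) and 4 of them became rocketships, an observed rate of 2%. The 200 and 4 are illustrative numbers, not client data. An exact (Clopper-Pearson) 95% confidence interval for the true rate, computed with the standard library by bisecting the binomial CDF, is still very wide.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def bisect_decreasing(f, lo: float = 0.0, hi: float = 1.0, iters: int = 100) -> float:
    """Root of a function that goes from positive to negative on [lo, hi]."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact two-sided confidence interval for a binomial proportion."""
    # Lower bound: the p at which P(X >= x) = alpha / 2.
    lower = 0.0 if x == 0 else bisect_decreasing(
        lambda p: binom_cdf(x - 1, n, p) - (1 - alpha / 2))
    # Upper bound: the p at which P(X <= x) = alpha / 2.
    upper = 1.0 if x == n else bisect_decreasing(
        lambda p: binom_cdf(x, n, p) - alpha / 2)
    return lower, upper


tests_run, rocketships = 200, 4   # hypothetical account history
low, high = clopper_pearson(rocketships, tests_run)
print(f"Observed {rocketships / tests_run:.0%}, 95% CI: {low:.1%} to {high:.1%}")
```

Even after 200 tests, the plausible true rate runs from roughly 0.5% to 5%, so a process change that nudged it from 2% to 3% would be invisible in the data.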

Rocketships are hard to predict. We’ve seen the following be rocketships:

Ugly UGC mashups

Straight UGC videos

Post-it statics

Review statics

Heavily branded long form video

BTS video

Product shots

In other words: ads. We’ve seen that every ad type has the chance of being a rocketship.

And from a messaging perspective, we’ve seen rocketships be problem-aware, solution-aware, or product-aware. Again, there’s no reliable framework for the rocketship.

With many of our clients, we run a light-touch “which ad do you bet will win?” exercise. People’s picks are almost always losers. At the start, it’s the “unexpected” ads that win; then people start betting against their gut, and the conventional ad might win the time after.

As I highlighted earlier, some of the best use of time that we’ve found is customer development. Hours spent talking to customers, consolidating that learning, and then having creative strategists play around with that research is the best activity we’ve found for increasing the probability of finding maintainers and winners.

The ever-looming runway

In certain environments, I can see the logic for investing more time upfront. Not just in customer development, but also for what the ad visual looks like or how the branding is represented.

I am sure that there is a way to increase the 1-5% chance of finding rocketships to maybe 3-10%, and you can do that by investing more time upfront. The EV of a 10% chance of a rocketship certainly makes the time investment pay off.
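A quick sketch of why a higher rocketship rate pays off so heavily: using the outcome profits from the worked example, and assuming (our simplification) that the maintainer rate stays at 43% while any extra rocketships come out of the loser share, EV climbs steeply with the rocketship probability. It leaves out the extra time cost of getting there, which is exactly the trade-off at stake.

```python
LOSER_PROFIT = 375
MAINTAINER_PROFIT = 1_517
ROCKETSHIP_PROFIT = 147_803
P_MAINTAINER = 0.43   # assumption: held fixed as the rocketship rate changes


def ev_for(p_rocketship: float) -> float:
    """EV per ad test for a given rocketship probability."""
    p_loser = 1 - P_MAINTAINER - p_rocketship
    if p_loser < 0:
        raise ValueError("probabilities would exceed 1")
    return (p_loser * LOSER_PROFIT
            + P_MAINTAINER * MAINTAINER_PROFIT
            + p_rocketship * ROCKETSHIP_PROFIT)


for p in (0.01, 0.02, 0.05, 0.10):
    print(f"Rocketship rate {p:.0%}: EV £{ev_for(p):,.0f}")
```

Going from 2% to 10% roughly quadruples the EV per test (about £3.8k to about £15.6k), which is why more upfront time could pay off if it genuinely moved the rate that much.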

But – there is the ever-looming runway.

In my experience the difference between the ‘just test it’ and the ‘let’s get this right’ teams is stark. Because if you’re the deterministic / let’s get this right team, then that will extend to your entire team philosophy. It won’t just be ads that this applies to but every part of how you run your startup.

The cultures that follow deterministic thinking end up taking weeks to make decisions on things like ads. There’s back and forth, then something else comes up. It gets passed on to someone else to edit and amend, delays happen, and so on.

Similarly, in probabilistic team cultures, that thinking permeates the entire business. When things become discussions and debates, there is usually a quick decision to test it. Maybe it’s not a perfect decision, but it’s good enough. And then the founders, the growth leads, the operational leads, whoever it is, move on to the next thing.

The cultures that follow probabilistic thinking end up taking minutes to make decisions on things like ads. Decisions are made, sign offs are quick, ads go live. The test is under way.

Rocketship winners are always needed to run successful businesses. In the early days, they’re needed to find product-channel fit: without your first rocketship, you’re stuck pushing out ads that don’t quite break even, let alone make money.

Beyond those early days, it’s how you continually unlock new levels of scale. Most businesses won’t survive on £20k of ad spend a month as the returns won’t hit break-even in your P&L. You need to get to higher levels of ad spend.

Based on a 1-in-50 chance of finding a rocketship, if you can test 4 things per week, you’ll find a rocketship roughly every 3 months on average.

Let’s imagine, though, that long debate doubled your chance of success (to 1-in-25), but at the expense of testing speed, so you’re now only launching 4 concepts per month. Your time-to-rocketship is now 6 months. For many startups, by month 6, it will already be the beginning of game over. There’s just not that much runway to correct after this point.
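The runway arithmetic can be sketched with a geometric distribution: if each test independently has probability p of being a rocketship, you need 1/p tests on average, and the chance of at least one rocketship in n tests is 1 - (1 - p)^n. The independence assumption and the 52/12 weeks-per-month conversion are ours; the rates and testing speeds are the ones in the text.

```python
WEEKS_PER_MONTH = 52 / 12


def expected_months(p: float, tests_per_month: float) -> float:
    """Average months until the first rocketship: 1/p tests at the given pace."""
    return (1 / p) / tests_per_month


def chance_within(p: float, tests_per_month: float, months: float) -> float:
    """Probability of at least one rocketship among the tests run in `months`."""
    n_tests = round(tests_per_month * months)
    return 1 - (1 - p) ** n_tests


fast = (1 / 50, 4 * WEEKS_PER_MONTH)   # test-first team: 1-in-50, 4 tests per week
slow = (1 / 25, 4)                      # debate-first team: 1-in-25, 4 tests per month

for label, (p, pace) in [("Fast", fast), ("Slow", slow)]:
    print(f"{label}: {expected_months(p, pace):.2f} months on average, "
          f"{chance_within(p, pace, 6):.0%} chance within 6 months")
```

In this toy model, the test-first team has roughly an 88% chance of a rocketship within six months, against roughly 62% for the debate-first team, even though each of the slower team’s tests is twice as likely to hit.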

Growing a company is one of the hardest things you can do.

Really killing it with ads is insanely hard. The system that drives ad growth is incredibly complex.

I’ve offered a highly simplified version of what’s at stake here. But I’ve done this to illustrate a way to think about testing over debating. There are lots of ways to run a business. Not every business is investor-backed. Not every business is operating on a model that requires continual growth or requires ads to create the entire sustainable business for them.

If you’ve got a highly successful other business unit (maybe wholesale or other offline DTC), then you can afford to take a different approach to thinking about growth.

But if I were running a brand where digital DTC or ecom was likely to be the biggest growth lever, then I’d be operating on the probabilistic/test side as much as possible. In my experience, the faster you can get learning through the system, the better your overall outcome.

I write a weekly newsletter focused on startup growth. I currently run an agency where we manage over £15m of ad spend across some of the most exciting new companies around. Before this, I founded my own startup brand, Wine List, was the first hire and head of growth at Thriva, and, on a fractional basis, was the most senior growth lead at Hims UK, Fy! and Ferly.

Subjects that excite me: incrementality, customer psychology, measurement, technology.

If you were forwarded this, maybe you’d like to read it every week! Give us a follow.

🔗 When you’re ready, here’s how Ballpoint can help you

→ Profitably grow paid social spend from £30k/m → £300k/m

→ Create full funnel, jobs to be done-focused creative: Meta, TikTok, YouTube

→ Improve your conversion rate with landing pages and fully managed CRO

→ Maximise LTV through strategic retention and CRM - not just sending out your emails

Email me – or visit Ballpoint to find out more.

NB: We support brands spending above £20k/month.

❤️🔥 Subscribe to our Substack to learn how to grow yourself

… because agencies aren’t for everyone, but our mission is to help all exciting challenger brands succeed and so we give away learnings, advice, how-tos, and reflections on the industry every week here in Early Stage Growth.