The ASC playbook we're using for £60m of revenue in 2026 (follow along as we test it)

I'll share the results as we go, but here's the starting point

In mid 2025, Haus released its meta-study of Meta ads [1]. The finding that stuck with me:

ASC performed 9% better than Manual campaigns at experiment midpoint. But 12% worse by the end.

In other words: ASC was quick to pick up low-hanging fruit, but had lower incrementality over time.

This matched what we were seeing. And it raised an obvious question: should we be running more Manual campaigns?

A year ago, we published our outlook and the answer was yes. Manual campaigns drove better CPA, but ASC helped with incremental volume. A handful of growth people messaged afterwards to say they’d seen the same.

Then, 30 days later, Meta made the decision for us.

I noticed one account had gone ASC-only. Spoke to our account reps. They told us the change was permanent.

Today, the idea of running Manual-only feels outdated. But the transition wasn’t smooth. We made mistakes. And we’re still learning.

Here’s what £20m of ad spend taught us about ASC in 2025, what we got wrong, and what we’re testing in 2026.

Sections:

The background on ASC

What is the new ASC format (nASC)?

What £20m of ad spend reveals about ASC across 2025

Where we went wrong

Account structures we’re using for 2026

What we’re testing next

The background on ASC

Advantage+ Shopping Campaigns were introduced in 2022. At a high level, this shift was a good one.

Until 2022, the role of the media buyer was often:

Browse the ad account multiple times per day

Identify audience segments to push ads to

Identify the ideal ad mix for certain audiences

Test messaging specific for those audiences

Adjust budgets between audiences and creatives to scale

Play whack-a-mole between all of the above

Meta realised that process could be better served with AI. If on some days, certain creatives with specific messages are working with different audiences, it makes sense to lean into those opportunities automatically. On the face of it, ASC should always have been more efficient.

And when we first tested ASC, it was. Maybe it was first-mover arbitrage, but we saw huge and immediate gains.

By January 2025, however, those gains had either narrowed or reversed.

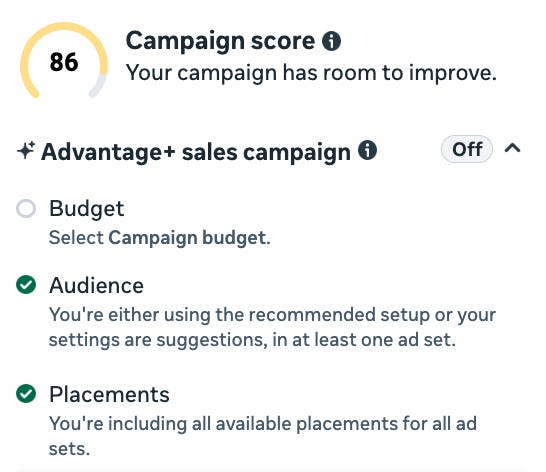

All well and good if we still had the option to choose. But in February, Meta started rolling out new ASC formats.

What is the new ASC format (nASC)?

Original ASC had just one ad set per campaign and could take up to 150 ads. It purposefully removed options around audiences, placements, and locations.

New ASC allows multiple ad sets. It also allows far more customisation. It re-introduced a lot of the Manual campaign features that many complained had gone.

But for the most part, even with moderate changes, it remains an “ASC.”

The biggest driver today of whether something will be an ASC is whether you’re using CBO or ABO. As soon as you switch to ABO (which many will do for creative testing), it goes into Manual.

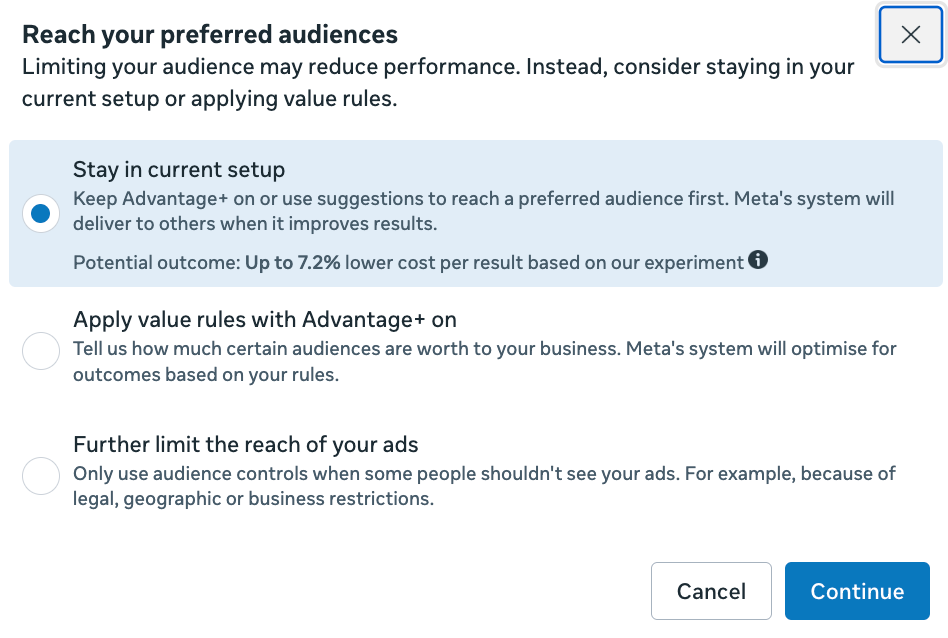

With Audiences, we’re now prompted on what the options are:

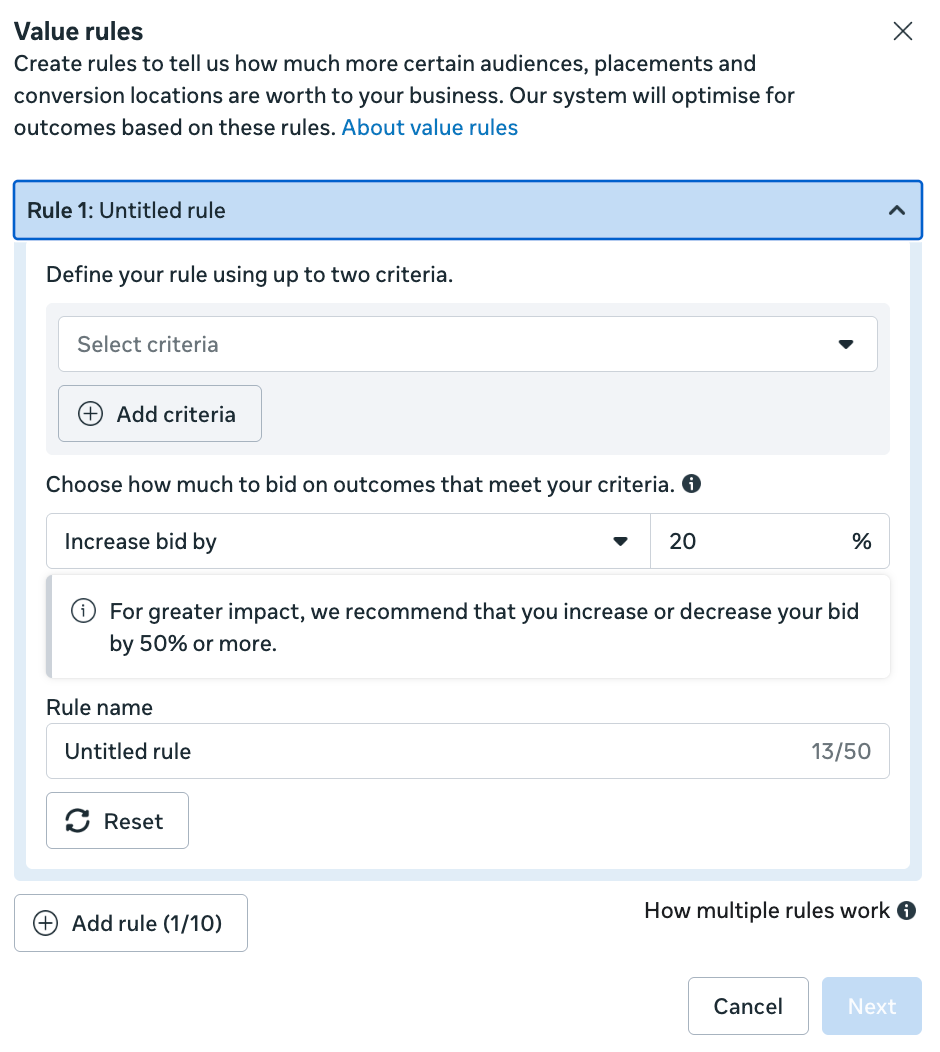

Here the “value rules” – which used to be called bid modifiers in the API – allow you to stay inside ASC while still inferring a little more data.

In practice, even with option three, it’s difficult to hit Manual again. Even if you were to target 57-61 year old women who live in Portsmouth and are into both fishing and British banking, Meta still serves it under the ASC banner.

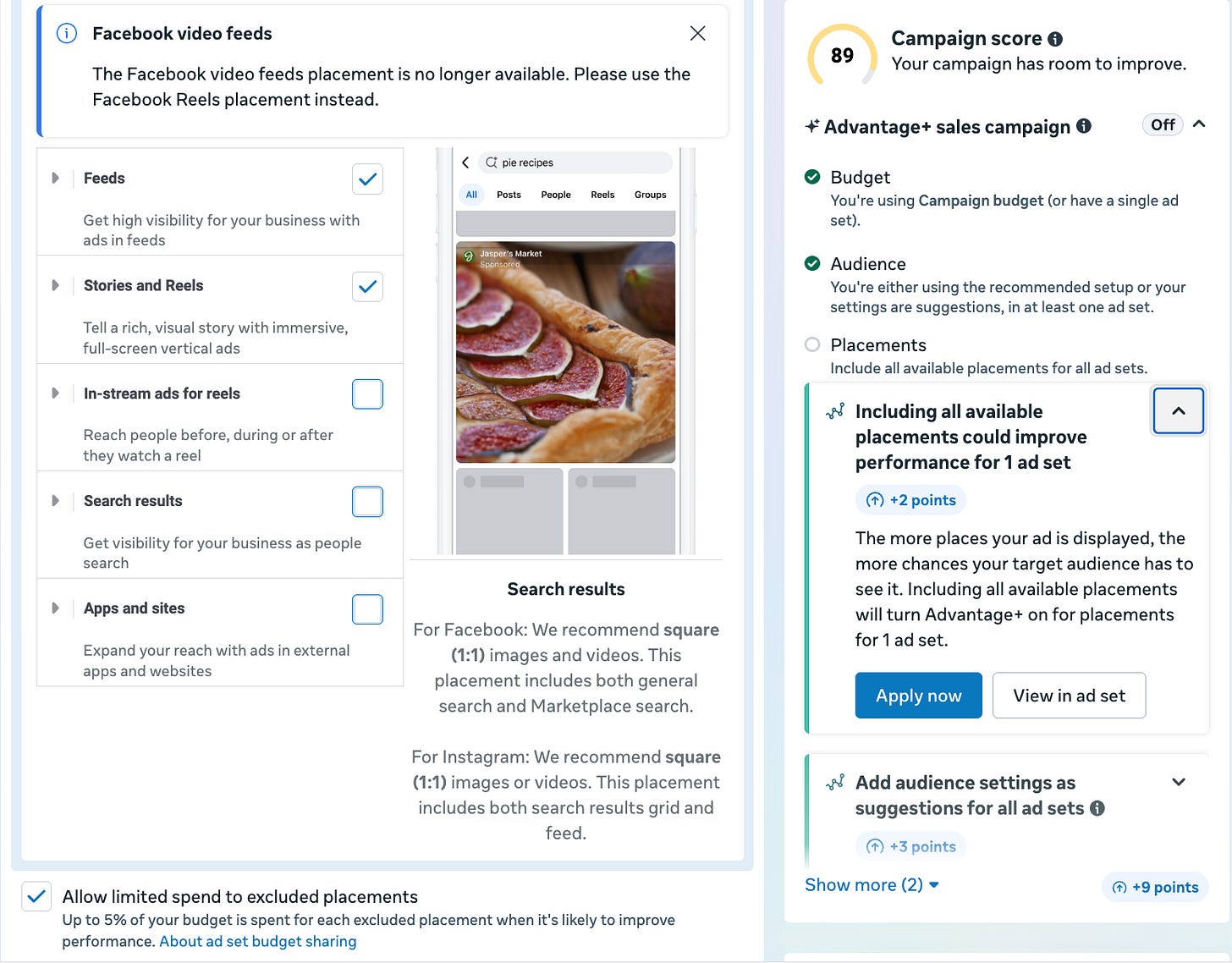

Placements, however, are a different story. Remove some placements and you fall into Manual mode again. And not only that, even in this setup, you still have ‘limited spend’ being exposed to those excluded placements by default.

All in all, so long as you’re running CBO, most of the time you’re going to be running ASC.
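The rules of thumb above can be sketched as a small check. This is only how we read the behaviour in our own accounts, not an official Meta rule, and the class, field, and function names are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class CampaignSetup:
    budget_level: str                  # "CBO" (campaign budget) or "ABO" (ad set budget)
    placements_excluded: bool          # True if any placements have been removed
    audience_customised: bool = False  # True if custom audience targeting is applied


def expected_mode(setup: CampaignSetup) -> str:
    """Guess whether Meta will label a campaign ASC or Manual.

    Assumption: this only encodes the observations in this post.
    """
    if setup.budget_level.upper() == "ABO":
        return "Manual"  # ABO pushes the campaign into Manual
    if setup.placements_excluded:
        return "Manual"  # removing placements also drops you into Manual
    # Audience customisation on its own has not pushed us out of ASC.
    return "ASC"


print(expected_mode(CampaignSetup("CBO", placements_excluded=False, audience_customised=True)))
```

Treat the output as a starting expectation and check the label Meta actually shows in the account.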

What £20m of ad spend reveals about ASC across 2025

Share of spend

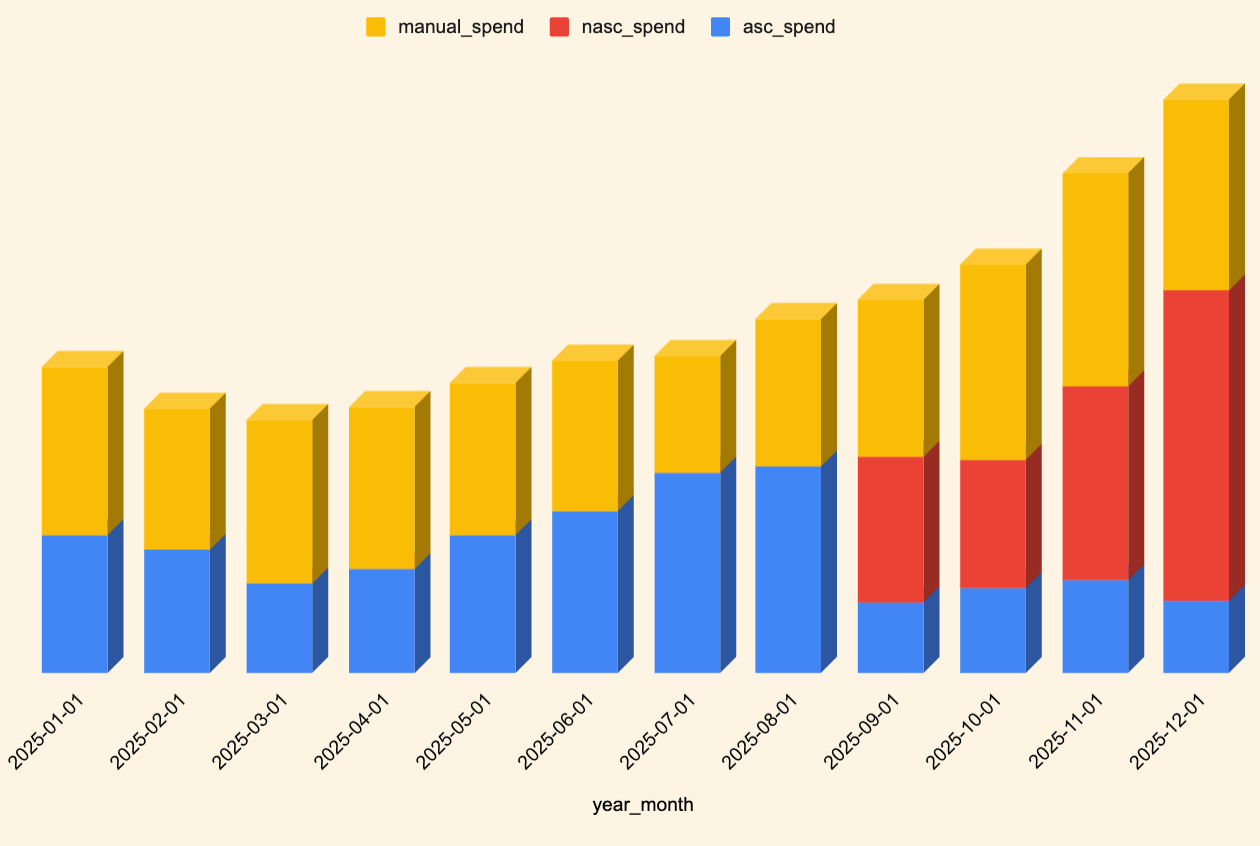

We managed just shy of £20m of ad spend last year. Throughout, we wrestled with what the ideal setup should be.

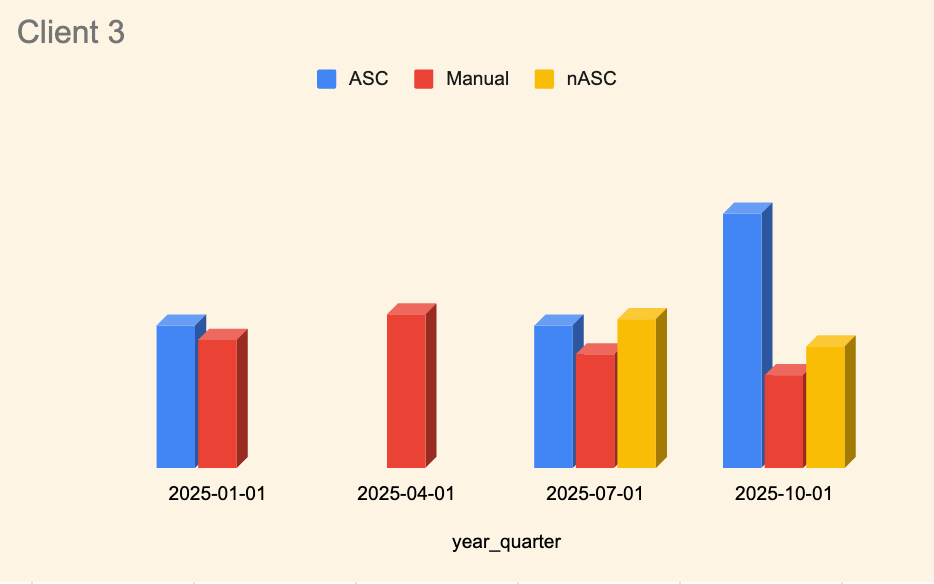

Here’s how spend evolved over time:

By Q3, we decided to start labelling our campaigns differently, and you can see how we introduced "nASC" as a new format choice. Working backwards, we know that ratio held as far back as about May.

How did CPA change throughout the year?

Spend shifted in part because of Meta forcing out the new campaign structure. But how did performance change with it?

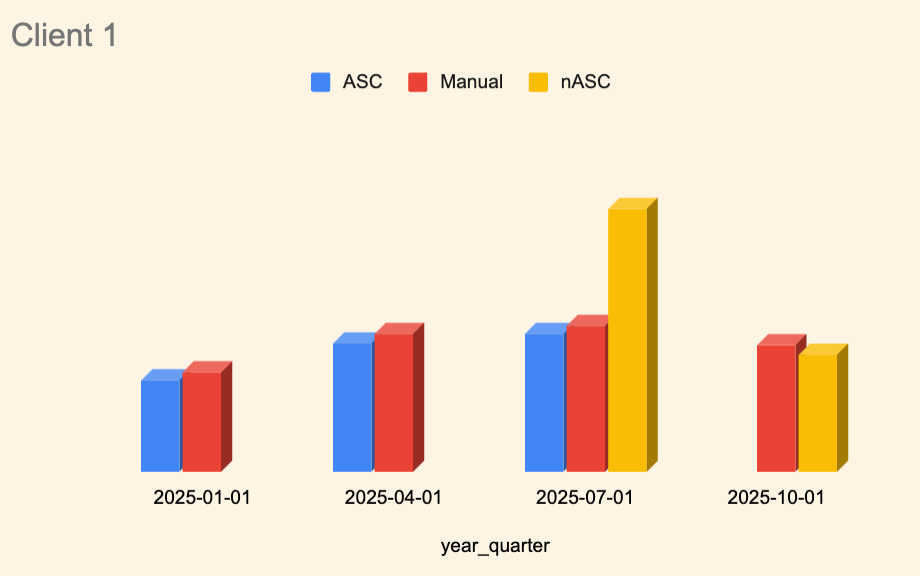

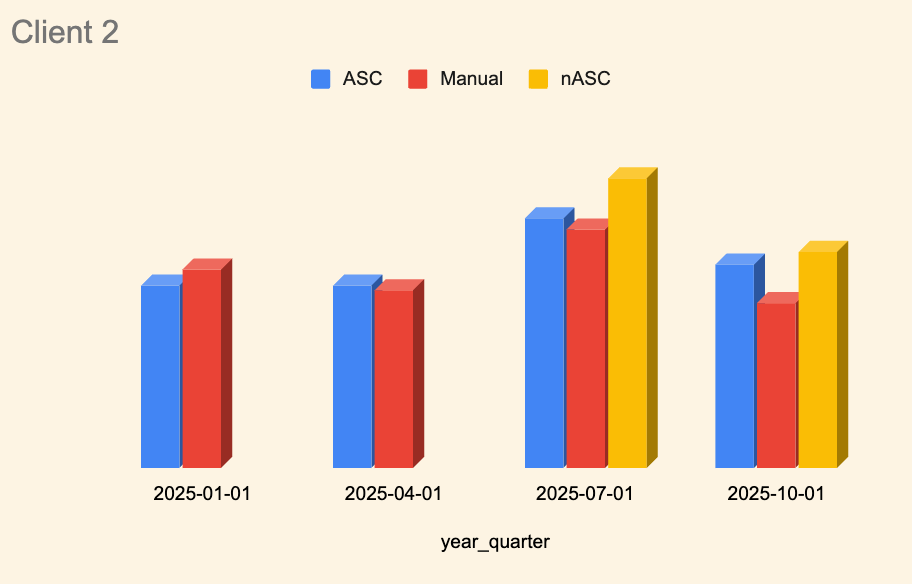

Here are three client examples of CPA over time. These show three stories that are representative of the client base as a whole.

In this example, we properly started testing the nASC format in Q3. In the first month, CPAs were almost double what they’d been in Q2.

With this client, old ASC was always cheaper than the alternatives.

But importantly in Q4, when we went full nASC/Manual (and no old format ASC), nASC beat Manual out.

With this client we saw a similar pattern: the introduction of nASC immediately increased CPA (in an already higher CPA period in Q3). While Q4 saw all CPAs reduce, the comparative performance remained the same.

With client 3, we actually switched to Manual only in Q2, while introducing both old ASC and nASC back into rotation by Q3.

The CPA gaps with client three were closer from the off, and by the time we got into Q4 the CPA spikes in old format ASCs were so big we turned them off completely. That said, Manual campaigns still won.
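Each of these comparisons comes down to spend divided by conversions, grouped by quarter and campaign label. Here is a minimal sketch of that calculation, assuming a campaign export with those four fields. The rows below are made-up placeholders, not client data, and the function name is hypothetical:

```python
from collections import defaultdict

# Hypothetical rows: (quarter, format label, spend in GBP, conversions).
# Placeholder numbers for illustration only; they do not reflect any client.
rows = [
    ("Q3", "ASC", 1000.0, 50),
    ("Q3", "nASC", 1000.0, 25),
    ("Q3", "Manual", 500.0, 20),
    ("Q4", "nASC", 700.0, 20),
    ("Q4", "nASC", 500.0, 20),
    ("Q4", "Manual", 600.0, 15),
]


def cpa_by_quarter_and_format(rows):
    """Sum spend and conversions per (quarter, format), then divide."""
    totals = defaultdict(lambda: [0.0, 0])
    for quarter, fmt, spend, conversions in rows:
        totals[(quarter, fmt)][0] += spend
        totals[(quarter, fmt)][1] += conversions
    # CPA is undefined when there are no conversions, so return None there.
    return {
        key: (spend / conv if conv else None)
        for key, (spend, conv) in totals.items()
    }


cpas = cpa_by_quarter_and_format(rows)
for (quarter, fmt), cpa in sorted(cpas.items()):
    print(quarter, fmt, "n/a" if cpa is None else f"{cpa:.2f} GBP")
```

Summing spend and conversions before dividing matters: averaging per-campaign CPAs would weight a tiny campaign the same as a large one.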

I write a 7-minute read for Substack every week. We manage £20m of ad spend per year, I’ve helped grow half a dozen businesses from £0 to £10m, and have built creative engines that scale for 9-figure consumer brands. Before I ran Ballpoint, I was a DTC founder and ex-operator.


Where we went wrong

Looking back, we made two mistakes.

First, we held onto old format ASC for too long.

We liked old format ASC for two reasons: performance on average was better, and the 150 ad limit was useful for scale. So we kept reactivating old campaigns rather than committing to learning the new format.

This made sense in isolation. But it meant we were slower to build conviction in nASC than we should have been.

Second, we avoided a proper learning phase because CPAs were already high.

By Q2, CPAs were escalating across several accounts. The instinct was to protect performance – not run experiments that might make things worse.

But this was probably chicken and egg. We didn’t invest in learning nASC because CPAs were high. But CPAs may have stayed high because we didn’t invest in learning nASC.

By Q3 we changed approach and prioritised new learnings. It took a quarter longer than it should have.

The lesson: when Meta forces a structural change, the cost of delaying adaptation usually outweighs the cost of short-term inefficiency.

Account structures we’re using for 2026

With that context, here’s how we’re structuring accounts today.

In our biggest accounts, nASC is now performing well.

And while Manual CPAs are outperforming – that’s usually because we run our creative testing in Manual on ABO. Creative testing has lower CPA by default because we’re not (usually) scaling within it. And we know that every channel, every campaign, every ad suffers from diminishing returns – as you scale, CPA goes up.
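To see why a small testing budget flatters CPA, here is a toy model of diminishing returns. The power-law curve and its parameters are assumptions chosen purely for illustration, not something fitted to our accounts:

```python
def conversions(spend: float, a: float = 2.0, b: float = 0.7) -> float:
    """Assumed response curve: conversions = a * spend**b, with 0 < b < 1.

    With b below 1, each extra pound buys fewer conversions than the last.
    """
    return a * spend ** b


def cpa(spend: float) -> float:
    """Cost per acquisition at a given spend level under the assumed curve."""
    return spend / conversions(spend)


testing_budget = 1_000.0   # hypothetical creative-testing spend
scaling_budget = 20_000.0  # hypothetical hero-campaign spend

# Same curve, same creative quality, but the larger budget shows a higher CPA.
print(cpa(testing_budget) < cpa(scaling_budget))  # True
```

Under this assumption, a lower CPA in the testing campaign says more about its budget than about the format.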

Steal this structure if you need a reset.

The typical setup today is as follows:

Hero nASC campaign

With one of the following setups depending on size:

(SMALL) 1 ad set with all best performing assets (usually 3-10 assets/ad set)

(MIDSIZE) 2 ad sets, often grouped by static/video (usually 5-25 assets/ad set)

(LARGE – SINGLE PRODUCT) 4 ad sets, grouped by Problem Awareness Stage (usually 10-50 assets/ad set)

(LARGE – LARGE CATALOGUE) 1x ad set per AOV bracket or product category (usually 5-50 assets/ad set)

Creative Testing Manual campaign

We test a lot of structures here. But the default remains:

ABO

1 ad set per concept

2-5 assets per ad set

Old Format ASC (scale periods)

During Christmas and peak, we turned back on a few of the old format ASC historic winners for additional scale. In these circumstances these were seen as ad hoc pushes with the setup usually:

100-150 ads per ad set
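If you want to keep this structure somewhere a script can check it, it could be written down roughly like this. The numbers are the ranges listed above; the dictionary layout, key names, and helper function are hypothetical:

```python
# Hero nASC campaign: typical setups by account size, as listed above.
# "ad_sets" is None where the count depends on the catalogue
# (one ad set per AOV bracket or product category).
HERO_NASC_SETUPS = {
    "small": {"ad_sets": 1, "grouping": "all best performing assets", "assets": (3, 10)},
    "midsize": {"ad_sets": 2, "grouping": "static / video", "assets": (5, 25)},
    "large_single_product": {"ad_sets": 4, "grouping": "problem awareness stage", "assets": (10, 50)},
    "large_catalogue": {"ad_sets": None, "grouping": "AOV bracket or product category", "assets": (5, 50)},
}

# Creative testing Manual campaign: ABO, 1 ad set per concept.
CREATIVE_TESTING = {"budget": "ABO", "ad_sets": "1 per concept", "assets": (2, 5)}

# Old format ASC, used ad hoc in scale periods.
OLD_FORMAT_ASC = {"ads_per_ad_set": (100, 150)}


def assets_in_typical_range(account_size: str, asset_count: int) -> bool:
    """Check an ad set's asset count against the usual range for that size."""
    low, high = HERO_NASC_SETUPS[account_size]["assets"]
    return low <= asset_count <= high


print(assets_in_typical_range("midsize", 12))
```

These are typical ranges rather than limits, so a False result is a prompt to look closer, not an error.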

What we’re testing next

We’re not done learning. Here’s what we’re actively experimenting with in Q1:

Number of ads per ad set. We’ve historically run 3-50 depending on account size. But we’re seeing signals that fewer ads with higher quality might outperform volume. Testing tighter ad sets (3-5 assets) against larger ones.

Types of exclusions. Placement exclusions push you into Manual mode – but what about audience exclusions within nASC? We’re testing excluding website visitors, ad engagers, and more to see impact.

Minimum budget percentages. nASC allows you to set minimum spend allocation per ad set. We’re testing whether forcing budget floors improves or hurts learning.

Bid caps by campaign type. Different bid cap strategies for partnership ads, best performers, and creative testing. Early hypothesis: tighter caps on proven winners, looser on testing.

I’ll share what we find as results come in.

The importance of being agile

Meta’s product development engine has really ramped up. In the late 2010s, software updates were few and far between, and announcements around them usually shrouded in darkness.

The last 18 months, as we highlighted in our Meta Ads in 2026 Report, have seen four of the most substantive algorithm updates we’ve ever had. This excludes the dozens of smaller updates they’re pushing throughout the year.

When we published our ASC report last year, lots got in touch to say they were switching to Manual only setups – only for Meta to force nASC on us a month later.

It’s important in times like this to be agile. It’s important to ensure that you are always experimenting. And that you periodically re-test things that failed before.

It’s also important to methodically think through advice you get. (That includes advice coming from me.) As just the three client examples above show, the breadth of difference is big.

Treat every account like its own entity, and while there is ‘best practice’, that is not the only practice.

If you need help thinking through ASC setups, or experimentation more broadly, let me know.

Always happy to help.

Thanks for reading.

Josh

Josh Lachkovic is the founder of Ballpoint, a growth marketing agency that helps brands scale from £1-20M ARR. Visit Ballpoint to learn more, or subscribe to Early Stage Growth for weekly insights on profitable scaling.

Note: Ballpoint works with brands looking to grow their spend from £30k per month to £300k per month.

[1] https://www.haus.io/blog/the-meta-report-lessons-from-640-haus-incrementality-experiments
